WikiQuestions

A large question dataset generated from Wikipedia sentences

Machine-learning systems such as Question Answering or Question Completion systems require large question datasets for training. However, large question datasets that are not restricted to a specific kind of question are hard to find. The WebQuestions (Berant et al., 2013) and Free917 (Cai & Yates, 2013) datasets both contain fewer than 10,000 questions. The SimpleQuestions dataset (Bordes et al., 2015) contains 108,442 questions, but these are limited to simple questions over Freebase triples of the form (subject, relationship, object). The 30M Factoid Question-Answer corpus (Serban et al., 2016) contains 30 million questions, but these, too, are limited to simple Freebase triple questions.

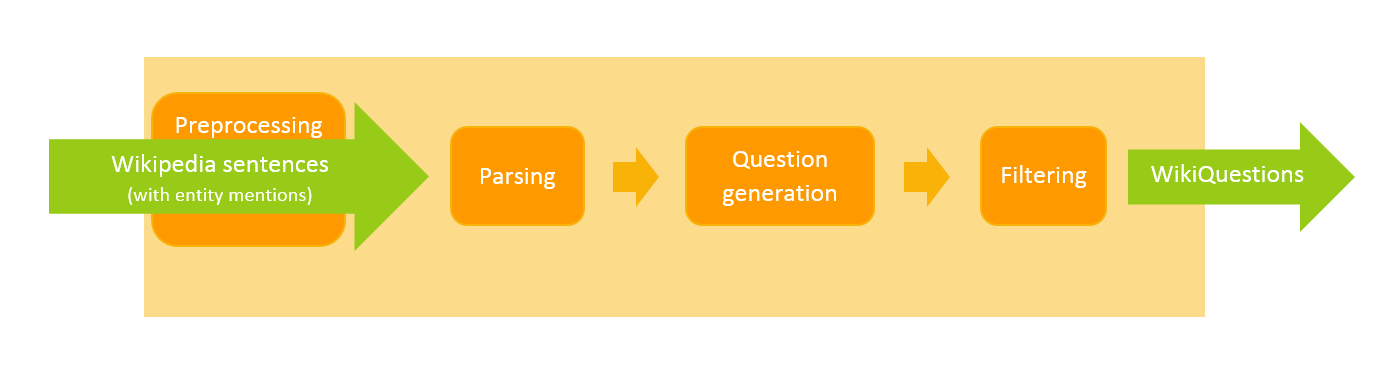

We introduce a question dataset containing 4,390,597 questions and corresponding answer entities that are generated by rephrasing Wikipedia sentences as questions. The rough pipeline of the question generation (QG) system is as follows: A Wikipedia dump with Freebase entity mentions is preprocessed by annotating entities with their types. The preprocessed Wikipedia dump is then parsed using a dependency parser. For each sentence, entities that fulfill certain grammatical criteria are selected as answer entities. A fitting WH-word is selected for an answer entity and various transformations are performed over the sentence to rephrase it as a question. Finally, the generated questions are filtered to avoid ungrammatical or otherwise unreasonable questions. The following section describes the system pipeline in more detail.

The Freebase entity mentions in the input sentences are a vital part of the QG-system. Freebase is a knowledge base containing semantic data in the form of entities and the relationships between them. Freebase stores a variety of information for each of its entities. Relevant for this work are the name and the types of an entity. For the QG-system, Freebase Easy (Bast et al., 2014) entity names are used. Freebase Easy names can differ from the original Freebase names: while Freebase names are not necessarily unique, Freebase Easy names are. This is achieved by adding disambiguating suffixes to Freebase names if two or more entities share the same name. Each entity can have a number of types. For example, the entity Lamborghini has the Freebase types Automobile Company, Business Operation, Consumer company, Employer, Literature Subject, Manmade Object, Organisation, Thing and Topic. These types are used to determine the correct question word as described in the following section. For the QG-system, each entity is annotated with exactly one of its types, which we refer to here as the entity's category. Which type is selected as the category depends on the overall frequency of each type as well as some hand-crafted selection rules. The selection of categories was part of a different work and is described in detail in section 4.2 of this thesis.

The input for the QG-system is a Wikipedia dump with recognized Freebase Easy entities. The input is preprocessed by annotating each entity with its category. Entity mentions are then represented in the format [entity name|entity category|original word in the sentence]. Additionally, flawed entity recognition is improved by merging identical entities that appear sequentially in the sentence (e.g. An [An_American_in_Paris|Composition|American] in [An_American_in_Paris|Composition|Paris] becomes the single entity [An_American_in_Paris|Composition|An American in Paris]). The following sentence from the preprocessed Wikipedia dump will be used as a running example to explain the system pipeline.

In 1989 , at the age of eight , [Anna_Kournikova|Person|Kournikova] began appearing in junior tournaments , and by the following year , was attracting attention from [Spartak_Tennis_Club|unknown|tennis] scouts across the [World_TeamTennis|Organisation|world] .
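The entity merging step described above can be sketched as follows. This is only an illustration under stated assumptions, not the actual code of annotate_entitysentences.py: the regular expression, the function name `merge_sequential_mentions` and the rule "merge when the combined words occur in the entity name with underscores replaced by spaces" are all hypothetical.

```python
import re

# Assumed mention format: [entity name|entity category|original word]
MENTION = re.compile(r"\[([^|\]]+)\|([^|\]]+)\|([^\]]+)\]")


def merge_sequential_mentions(sentence):
    """Merge neighbouring mentions of the same entity into one mention."""
    # Splitting with a capture group yields: text, mention, text, mention, ..., text
    parts = re.split(r"(\[[^\]]+\])", sentence)
    merged = []
    for part in parts:
        cur = MENTION.fullmatch(part)
        prev = MENTION.fullmatch(merged[-2]) if len(merged) >= 2 else None
        if cur and prev and cur.group(1) == prev.group(1):
            name, category = cur.group(1), cur.group(2)
            # Words covered by both mentions plus the text between them
            span = prev.group(3) + merged[-1] + cur.group(3)
            surface = name.replace("_", " ")
            if span in surface:
                # Also absorb words directly before the first mention
                # if they complete the entity name (e.g. the leading "An").
                prefix = surface[:surface.index(span)]
                if prefix and merged[-3].endswith(prefix):
                    merged[-3] = merged[-3][:-len(prefix)]
                    span = prefix + span
                merged[-2:] = ["[%s|%s|%s]" % (name, category, span)]
                continue
        merged.append(part)
    return "".join(merged)


example = ("An [An_American_in_Paris|Composition|American] in "
           "[An_American_in_Paris|Composition|Paris]")
print(merge_sequential_mentions(example))
```

Sentences without sequential identical entities, such as the running example, pass through this sketch unchanged.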

The Wikipedia entity-sentences are parsed using the spaCy dependency parser. Entity mentions are replaced by the entity name which is treated by the parser as a single word. The parse is saved in CoNLL-2007 format with an additional column for entity mentions as shown in the example below:

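| address | word | tag | head | rel | entity |
|---|---|---|---|---|---|
| ... | | | | | |
| 10 | Anna_Kournikova | NNP | 11 | nsubj | ("Anna_Kournikova", "Person", "Kournikova", 10) |
| 11 | began | VBD | 0 | root | None |
| 12 | appearing | VBG | 11 | xcomp | None |
| 13 | in | IN | 12 | prep | None |
| 14 | junior | JJ | 15 | amod | None |
| 15 | tournaments | NNS | 13 | pobj | None |
| ... | | | | | |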
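As a rough illustration of how entity mentions can be turned into single tokens before parsing, the sketch below replaces each mention by its entity name and records the mention under its 1-based token address, mirroring the entity column above. The function name `mentions_to_tokens` and the whitespace tokenization are assumptions made for this example; the actual spacy_parse.py may work differently.

```python
import re

# Assumed mention format: [entity name|entity category|original word]
MENTION = re.compile(r"\[([^|\]]+)\|([^|\]]+)\|([^\]]+)\]")
# A piece is either a whole bracketed mention or a whitespace-delimited word
PIECE = re.compile(r"\[[^\]]+\]|\S+")


def mentions_to_tokens(sentence):
    """Return (tokens, entities); entities maps a token address to its mention."""
    tokens, entities = [], {}
    for address, piece in enumerate(PIECE.findall(sentence), start=1):
        mention = MENTION.fullmatch(piece)
        if mention:
            name, category, original = mention.groups()
            tokens.append(name)  # the parser sees the entity name as one word
            entities[address] = (name, category, original, address)
        else:
            tokens.append(piece)
    return tokens, entities


sentence = ("In 1989 , at the age of eight , [Anna_Kournikova|Person|Kournikova] "
            "began appearing in junior tournaments , and by the following year , "
            "was attracting attention from [Spartak_Tennis_Club|unknown|tennis] "
            "scouts across the [World_TeamTennis|Organisation|world] .")
tokens, entities = mentions_to_tokens(sentence)
print(entities[10])
```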

For question generation, the parsed sentences are first preprocessed. Preprocessing steps include:

The example sentence after the preprocessing looks like this:

In 1989 , at the age of eight , [Anna_Kournikova|Person|Kournikova] began appearing in junior tournaments

The system then selects an entity within the sentence for which to ask the question. This answer entity is either the subject of the sentence (nsubj / nsubjpass), a direct object (dobj) or an object of a preposition (pobj). Because years and months are not marked as entities in the Wikipedia dump, they are handled separately and can also be selected as answer entities. For the example sentence, two answer entities are selected, an object of a preposition and the subject:

1989

[Anna_Kournikova|Person|Kournikova]
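The selection criteria above can be sketched as a simple filter over parsed tokens. The `Token` structure, the year pattern and the month list are assumptions for illustration, and the heads given for the first two tokens are not taken from the original parse.

```python
import re
from typing import NamedTuple, Optional

class Token(NamedTuple):
    address: int
    word: str
    tag: str
    head: int
    rel: str
    entity: Optional[tuple]

ANSWER_RELS = {"nsubj", "nsubjpass", "dobj", "pobj"}
YEAR = re.compile(r"\d{4}")  # assumption: a year is a four-digit number
MONTHS = {"January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"}


def is_date(word):
    return bool(YEAR.fullmatch(word)) or word in MONTHS


def select_answer_entities(tokens):
    """Keep subjects and objects that are entity mentions, years or months."""
    return [t for t in tokens
            if t.rel in ANSWER_RELS and (t.entity is not None or is_date(t.word))]


tokens = [
    Token(1, "In", "IN", 11, "prep", None),    # head assumed
    Token(2, "1989", "CD", 1, "pobj", None),   # head assumed
    Token(10, "Anna_Kournikova", "NNP", 11, "nsubj",
          ("Anna_Kournikova", "Person", "Kournikova", 10)),
    Token(11, "began", "VBD", 0, "root", None),
    Token(12, "appearing", "VBG", 11, "xcomp", None),
    Token(13, "in", "IN", 12, "prep", None),
    Token(14, "junior", "JJ", 15, "amod", None),
    Token(15, "tournaments", "NNS", 13, "pobj", None),
]
selected = [t.word for t in select_answer_entities(tokens)]
print(selected)
```

In this sketch, tournaments is an object of a preposition but is neither an entity mention nor a date, so it is not selected.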

Next, the question word is determined. Which question word is chosen depends on the category of the answer entity as well as its role in the sentence. For example, if it is the subject of the sentence and its category is in a list of selected categories such as Person or Fictional Character, the question word is Who. The table below gives an overview of which question word is chosen for each combination of category and role.

| | nsubj / nsubjpass | dobj | pobj |
|---|---|---|---|
| Who-category | Who | Who | - |
| What-category | What / Which <type> | What / Which <type> | - |
| Location | What / Which <type> | What / Which <type> | Where |
| Year / Month | - | - | When |

As can be seen in the table, for answer entities that fall into a What-category, Which <type> can also be chosen as question word. The type that is inserted for <type> is taken from the list of Freebase types of the answer entity. However, only certain handpicked types are inserted. The entity Lamborghini for example has the Freebase types Automobile Company, Business Operation, Consumer company, Employer, Literature Subject, Manmade Object, Organisation, Thing and Topic. However, a question that starts with e.g. Which manmade object would rarely be encountered in real life. Therefore, if Lamborghini is the answer entity for a question, aside from the question word What only the question words Which automobile company, Which business operation and Which organisation will be chosen.

The question words for the answer entities of the example sentence are:

1989 --> "When"

[Anna_Kournikova|Person|Kournikova] --> "Who"
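The table lookup can be sketched as a small function. The category labels "Year" and "Month", the contents of `WHO_CATEGORIES` and `WHICH_TYPES`, and the function name are assumptions for illustration; only the Person and Fictional Character categories and the Lamborghini types are taken from the text above.

```python
WHO_CATEGORIES = {"Person", "Fictional Character"}  # "such as", list is incomplete
SUBJECT_RELS = {"nsubj", "nsubjpass"}
# Hypothetical excerpt of the handpicked types allowed after "Which"
WHICH_TYPES = {"Automobile Company", "Business Operation", "Organisation"}


def question_words(role, category, types=()):
    """Return the possible question words for an answer entity (may be empty)."""
    if category in ("Year", "Month"):
        return ["When"] if role == "pobj" else []
    if category in WHO_CATEGORIES:
        return ["Who"] if role in SUBJECT_RELS or role == "dobj" else []
    if category == "Location" and role == "pobj":
        return ["Where"]
    if role in SUBJECT_RELS or role == "dobj":
        # What-category (and Location as subject or direct object)
        return ["What"] + ["Which " + t.lower() for t in types if t in WHICH_TYPES]
    return []


lamborghini_types = ["Automobile Company", "Business Operation", "Consumer company",
                     "Employer", "Literature Subject", "Manmade Object",
                     "Organisation", "Thing", "Topic"]
print(question_words("pobj", "Year"))
print(question_words("nsubj", "Person"))
# The category "Organisation" for Lamborghini is assumed for this example
print(question_words("nsubj", "Organisation", lamborghini_types))
```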

Next, various transformations are performed to turn the sentence into a question. If the answer entity is the subject of the sentence, these transformations are straightforward. First, every word that depends on the subject is removed from the sentence (e.g. adjectives). The subject itself is replaced by the selected question word, which becomes the first word of the question. Then, everything that appears after the subject in the sentence is appended to the new question string, except for some words that appear directly before the predicate and only make sense in the context of the previous sentences (e.g. also, however, then). The subject question of the example sentence is:

Who began appearing in junior tournaments ?
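A minimal sketch of the subject transformation is shown below. It assumes tokens with 1-based addresses and head pointers as in the parse format, and it simplifies the rule for context words by skipping them anywhere between the subject and the predicate. The names `Tok`, `subtree` and `subject_question` are hypothetical.

```python
from typing import NamedTuple

class Tok(NamedTuple):
    address: int
    word: str
    head: int
    rel: str

CONTEXT_WORDS = {"also", "however", "then"}


def subtree(tokens, root):
    """Addresses of root and of every token that depends on it."""
    ids = {root}
    changed = True
    while changed:
        changed = False
        for t in tokens:
            if t.head in ids and t.address not in ids:
                ids.add(t.address)
                changed = True
    return ids


def subject_question(tokens, subj_address, question_word):
    subj = next(t for t in tokens if t.address == subj_address)
    dropped = subtree(tokens, subj_address)  # subject and its dependents
    words = [question_word]
    for t in tokens:
        if t.address <= subj_address or t.address in dropped:
            continue
        # subj.head is the predicate; skip context words in front of it
        if t.address < subj.head and t.word.lower() in CONTEXT_WORDS:
            continue
        words.append(t.word)
    return " ".join(words + ["?"])


tokens = [
    Tok(10, "Anna_Kournikova", 11, "nsubj"),
    Tok(11, "began", 0, "root"),
    Tok(12, "appearing", 11, "xcomp"),
    Tok(13, "in", 12, "prep"),
    Tok(14, "junior", 15, "amod"),
    Tok(15, "tournaments", 13, "pobj"),
]
question = subject_question(tokens, 10, "Who")
print(question)
```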

Finally, some post-processing is performed. This includes:

Since our example subject question does not contain any entity, it is filtered out.

For object questions, the transformations are more complex. First, as for subject questions, every word that depends on the object is removed from the sentence. In addition, if the object is the object of a preposition, this preposition is removed as well. The object itself is then replaced by the question word, which becomes the first word of the question. The predicate is determined from the parse and a lemmatizer (WordNet Lemmatizer) is used to get the infinitive of the predicate. If no auxiliary verb exists in the original sentence and the predicate is not a form of to be, one of the auxiliary verbs do, does and did is chosen depending on the tense, person and number of the predicate. The auxiliary verb is appended to the question, followed by the subject and its dependencies. Next, possible additional auxiliary verbs are appended, as well as the infinitive of the predicate and negations, particles (e.g. shut down, hand over) and adverbial modifiers (e.g. publicly broadcast, recorded live) that belong to the predicate. Now, if the answer entity appears before the predicate in the sentence, everything following the predicate is appended to the question. This results in the following object question for our example sentence:

When did [Anna_Kournikova|Person|Kournikova] begin appearing in junior tournaments ?
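The choice between do, does and did can be sketched with a lookup on the Penn Treebank tag of the predicate, which encodes tense, person and number. This mapping is an assumption about how the choice could be made, not necessarily how qg.py does it. The sketch covers only the case without an existing auxiliary verb and with a predicate that is not a form of to be, and it takes the lemma as an argument instead of calling a lemmatizer.

```python
# Assumed mapping from the predicate's tag to the inserted auxiliary verb
AUX_BY_TAG = {
    "VBD": "did",   # past tense
    "VBZ": "does",  # present tense, third person singular
    "VBP": "do",    # present tense, other persons
    "VB": "do",     # base form
}


def object_question(question_word, subject, predicate_tag, predicate_lemma, rest):
    """Assemble: question word, auxiliary, subject, infinitive, remaining words."""
    if predicate_tag not in AUX_BY_TAG:
        raise ValueError("unsupported predicate tag: " + predicate_tag)
    aux = AUX_BY_TAG[predicate_tag]
    return " ".join([question_word, aux, subject, predicate_lemma, *rest, "?"])


question = object_question(
    "When",
    "[Anna_Kournikova|Person|Kournikova]",
    "VBD",     # tag of "began"
    "begin",   # infinitive, assumed to come from the lemmatizer
    ["appearing", "in", "junior", "tournaments"],
)
print(question)
```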

If the answer entity appears after the predicate, everything between the predicate and the answer entity's head is appended. That way, if the sentence structure of our example sentence had been different, the outcome would still be the same:

[Anna_Kournikova|Person|Kournikova] began appearing in junior tournaments in 1989 at the age of eight.

--> When did [Anna_Kournikova|Person|Kournikova] begin appearing in junior tournaments ?

Finally, clausal complements (e.g. He reported that they had left, She supported what they did), open clausal complements (e.g. He considered him honest, She likes to swim) and prepositional phrases (in 2018, at the Eiffel Tower) are appended if they are not already part of the question. To finalize the questions, the same post-processing as for subject questions is performed.

Using this system, 44,045,711 questions are generated from 140,879,948 Wikipedia sentences.

Once the questions are generated, several filters are applied to improve the quality of the final questions. The following table shows the filter criteria together with the number of questions that are discarded if solely the corresponding filter is applied over all generated questions.

| Filter | Criterion | # discarded questions | % discarded questions |
|---|---|---|---|
| Freebase-Connection-Filter | Entities in the question don't have a connection in Freebase (directly or via one mediator entity) | ~16,375,174 | ~36.7% |
| Uppercase-Filter | Question contains uppercase letters outside of entity mentions (often means wrong entity recognition) | 15,126,563 | 33.9% |
| Lowercase-Filter | Question contains entities whose original word consists only of lowercase letters (often means wrong entity recognition) | 14,491,569 | 32.5% |
| MID-Question-Filter | An entity name in the question can't be mapped to any Freebase MID | 11,070,738 | 24.8% |
| 2-Entities-Filter | Question contains more than two entities | 8,547,368 | 19.1% |
| MID-Answer-Filter | Answer entity name can't be mapped to any Freebase MID | 5,097,149 | 11.4% |
| It-Answer-Filter | Original word of the answer entity is "it" (often means wrong entity recognition) | 3,562,766 | 8.0% |
| Answer-in-Question-Filter | Answer entity appears in the question | 3,551,238 | 8.0% |
| Comma-Filter | Question contains a comma | 2,912,552 | 6.5% |
| Context-Word-Filter | Question contains a word that needs the context of previous sentences in order to make sense (e.g. they, there, these, ...) | 1,989,359 | 4.5% |
| It-Question-Filter | Original word of an entity in the question is "it" | 1,814,137 | 4.1% |

After the filter process, the question dataset consists of 4,390,597 questions.
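Several of the purely string-based filters can be sketched as predicates over a question and its answer. This is an illustration, not the code of filter_questions.py: the function names are hypothetical, the Uppercase-Filter here ignores the capitalized question word at the start, the comma check looks only outside entity mentions, and the context word list is limited to the three words named in the table. Filters that need Freebase (connection and MID lookups) are left out.

```python
import re

# Assumed mention format: [entity name|entity category|original word]
MENTION = re.compile(r"\[([^|\]]+)\|([^|\]]+)\|([^\]]+)\]")
CONTEXT_WORDS = {"they", "there", "these"}


def outside_mentions(question):
    return MENTION.sub(" ", question)


def uppercase_filter(question, answer):
    words = outside_mentions(question).split()[1:]  # skip the question word
    return any(ch.isupper() for w in words for ch in w)


def lowercase_filter(question, answer):
    return any(m.group(3).islower() for m in MENTION.finditer(question))


def two_entities_filter(question, answer):
    return len(MENTION.findall(question)) > 2


def answer_in_question_filter(question, answer):
    m = MENTION.fullmatch(answer)
    name = m.group(1) if m else answer  # years and months are plain strings
    return name in {x.group(1) for x in MENTION.finditer(question)}


def comma_filter(question, answer):
    return "," in outside_mentions(question)


def context_word_filter(question, answer):
    return any(w.lower() in CONTEXT_WORDS for w in outside_mentions(question).split())


FILTERS = [uppercase_filter, lowercase_filter, two_entities_filter,
           answer_in_question_filter, comma_filter, context_word_filter]


def keep(question, answer):
    """A question survives only if no filter fires."""
    return not any(f(question, answer) for f in FILTERS)


q = "When did [Anna_Kournikova|Person|Kournikova] begin appearing in junior tournaments ?"
print(keep(q, "1989"))
```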

python3 annotate_entitysentences.py < <wikipedia_dump_with_entity_mentions> > <preprocessed_wikipedia_dump>

The original Wikipedia dump with entity mentions that was used as input data can be found at

/nfs/raid5/buchholb/semantic-wikipedia-data/entitysentences.txt

An already preprocessed Wikipedia dump can be found at

/nfs/students/natalie-prange/wiki_files/preprocessed_entitysentences.txt

python3 spacy_parse.py < <preprocessed_wikipedia_dump> > <parsed_wikipedia_dump>

The parsed Wikipedia dump can be found at

/nfs/students/natalie-prange/qg_files/parses/entityparse.txt

python3 qg.py < <parsed_wikipedia_dump> > <generated_questions>

The dataset of all generated questions can be found at

/nfs/students/natalie-prange/qg_files/generated_questions/gq_2019-02-25.txt

python3 filter_questions.py < <generated_questions> > <filtered_questions>

The final WikiQuestions dataset can be found at

/nfs/students/natalie-prange/qg_files/filtered_questions/fq_2019-02-25.txt

The entire corpus can be found here.

Despite the efforts made in this work to improve the quality of the entity recognition (via entity merging and filters), faulty entity recognition is still one of the biggest sources of errors in the generated questions. In the following example, the word Bulgaria should have been recognized as the country Bulgaria and not as Prime Minister of Bulgaria:

What had an unstable party [Parliamentary_system|Form of Government|system] ? [Prime_Minister_of_Bulgaria|Government Office or Title|Bulgaria]

However, it is not always as easy as just taking the entity that exactly matches the original word. In the next example, the word Alexander should not be recognized as Wars of Alexander the Great nor as Alexander (the movie) but as Alexander the Great.

Who did [Wars_of_Alexander_the_Great|Event|Alexander] blame for his father 's death ? [Darius_III|Person|Darius]

Due to the current implementation of the QG-system, there is exactly one answer provided for each question. However, questions with a predicate in plural form exist, since the number of the sentence predicate is never changed. That means that from the original sentence:

[Sofia|Location|Sofia] and [Plovdiv|Location|Plovdiv] are [Bulgaria|Location|country] 's air travel hubs .

the question

Which cities are [Bulgaria|Location|country] 's air travel hubs ? [Sofia|Location|Sofia]

is generated. If only the questions are needed for training, as is the case for Query Auto-Completion systems, this is not an issue. For other applications, such as Question Answering, it can be a problem. One possible solution is to transform all question predicates into their singular form. This, however, limits the variety of the questions in the dataset. A better solution would be to include all answers that are given in the sentence by checking for conjunctions.
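The conjunction check suggested above could look roughly like the sketch below, which is not part of the current system. It assumes that conjuncts are attached to the first conjunct with the dependency relation conj, as in spaCy's English parses; the token structure and the heads are written by hand for this example.

```python
from typing import NamedTuple

class Tok(NamedTuple):
    address: int
    word: str
    head: int
    rel: str


def answers_with_conjuncts(tokens, answer_address):
    """Return the answer word together with all words conjoined to it."""
    found = [answer_address]
    frontier = [answer_address]
    while frontier:
        current = frontier.pop()
        for t in tokens:
            if t.rel == "conj" and t.head == current and t.address not in found:
                found.append(t.address)
                frontier.append(t.address)
    words = {t.address: t.word for t in tokens}
    return [words[a] for a in sorted(found)]


# Hand-written partial parse of "Sofia and Plovdiv are ... hubs" (heads assumed)
tokens = [
    Tok(1, "Sofia", 4, "nsubj"),
    Tok(2, "and", 1, "cc"),
    Tok(3, "Plovdiv", 1, "conj"),
    Tok(4, "are", 0, "root"),
    Tok(5, "hubs", 4, "attr"),
]
answers = answers_with_conjuncts(tokens, 1)
print(answers)
```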

In order to filter out ungrammatical questions more reliably, one could introduce a filter that uses a language model to compute the probability of the question. Questions with a low probability can be filtered out. Similarly, one could use a parser that yields a parse probability for a question and filter out questions with a low probability.

Berant, Jonathan, et al. "Semantic parsing on freebase from question-answer pairs." Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing. 2013.

Cai, Qingqing, and Alexander Yates. "Large-scale semantic parsing via schema matching and lexicon extension." Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Vol. 1. 2013.

Bordes, Antoine, et al. "Large-scale simple question answering with memory networks." arXiv preprint arXiv:1506.02075 (2015).

Serban, Iulian Vlad, et al. "Generating factoid questions with recurrent neural networks: The 30m factoid question-answer corpus." arXiv preprint arXiv:1603.06807 (2016).

Bast, Hannah, et al. "Easy access to the freebase dataset." Proceedings of the 23rd International Conference on World Wide Web. ACM, 2014.